Towards a reproducible ETL: an overview of Open Targets' platform inputs

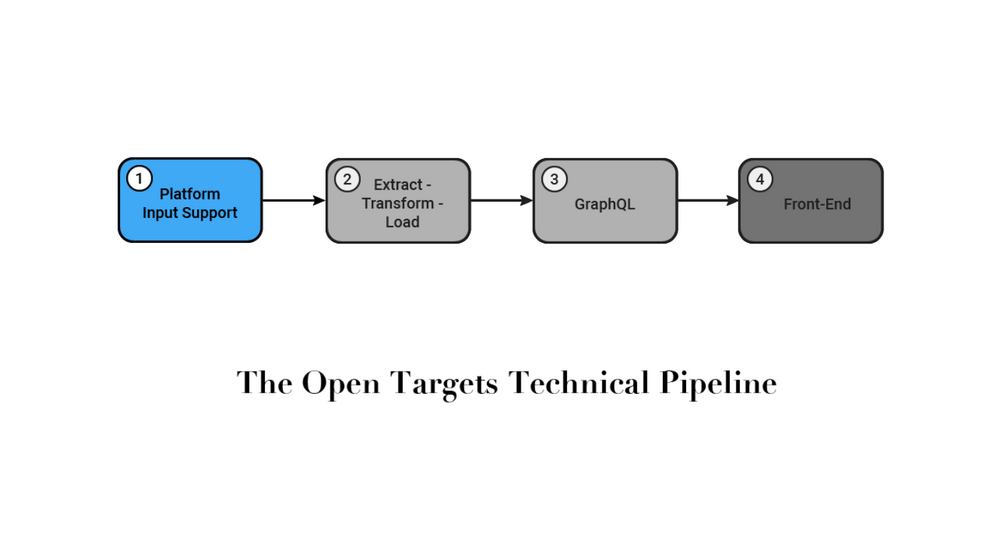

This is the first of a four-part series looking at the codebase that underpins the Open Targets Platform project. The Platform assists with target identification and prioritisation by scoring target-disease associations based on our data sources. In this first installment, we’ll discuss Platform Input Support, our way of ensuring reproducible inputs for our Extract-Transform-Load (ETL) pipeline.

In the past, the various data sources were retrieved from different sources at the time the pipeline was run. The original data and the various manipulations were not stored. Consequently, it was not possible to guarantee the reproducibility of the pipeline itself. This caused problems because data providers could update or change their data between retrievals. As a result the same code run twice potentially yielded different results and it was not clear if errors were caused by changes to our code or data.

To solve this problem, the backend team came up with the idea of writing software to download the datasets, manipulate them and store the output. This way, each time additional processing was required such as by our data team or the ETL pipeline we could reuse the same inputs and know that different outputs were the result of changes that we had made to our codebase.

The basic idea is relatively simple. We conceptualise a resource type as a step, and through a configuration file (yaml) we define which of those steps to run. For each step it is possible to indicate where to download the raw data (either locally or in cloud storage). Some steps undertake limited data transformations to standardise data formats to simplify later processing. As a team we have a preference for using json lines when possible. By reducing the number of formats we deal with, there is less need to write supporting code on other projects.

To give an idea of the number of data sources type we query, they include:

- Download file from Google Cloud: scanning buckets to find the most recent file available

- Download from FTP: using the FTP URI to get the proper file

- Download shared spreadsheet, separated value (csv/tsv) or owl files and manipulating the data to generate JSON output

- Query REST APIs or Elasticsearch instances to JSON output

The main objective during the development of the project was the robustness of the code and the reusability of objects. For instance, the module GoogleBucketResource is used for uploading the files generated to our storage in Google Cloud Platform, and can be parameterised so each step can use the same code.

Further improvements such as extensive tests and CI/CD are planned to be implemented to increase our confidence in the outputs, ease refactoring, and make deployments easier.

If you want to check out the code for yourself, it is a public repository and can be found on Github.

This work underlied the recent release of the next-generation Open Targets Platform. Find out about some of the other changes we made in our release blog post.