Open Targets meets Docker and Luigi

In the latest release of our Open Targets Platform, we have 18 publicly available data sources all integrated in one place to facilitate your drug target identification and prioritisation.

We process this data with a complex and ever-changing ETL (extract, transform, load) pipeline, which currently consists of 15 steps.

One of the major requirements for our pipeline is that it should work seamlessly in different environments: we want anyone to easily fork our code and host a private version.

Docker containers make our pipeline portable. Each step of our pipeline is encapsulated in a container, where dependencies are specified and their history tracked with version control.

In addition, the containers allow our current industry partners (GSK, Biogen, Takeda, and Celgene) to re-run our pipeline and augment it with their own private and licensed data.

You can also install your own copy of the public Open Targets Platform, whether you are in industry or academia! Please get in touch if you want to know more.

Even though containers satisfied all the dependencies of the pipeline code, the various modules of the pipeline still had to be triggered manually and in the right order. After each step completed, we had to perform quality control.

This meant that building a release cycle with updated versions of our data was a laborious and manual task.

It was also difficult to make changes to the existing code. We had to make sure that any change to the code would not alter the quality of the output data. Ultimately this meant running the entire pipeline, something that takes too long to do for every single change.

This was far from ideal for our team, so we started looking at what we could build internally and at which existing solutions could address the issues above. We wanted something that:

- allowed each step to live as its own separate module

- could run steps independently, leveraging existing data when available, thus leading to quicker feedback on our code changes

- would allow us to write other steps in a different language, if necessary

- wrote to the main backend we use, which is Elasticsearch

- ran on a single machine with no dependencies other than Docker
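To make the second requirement concrete, here is a minimal sketch in plain Python of a runner that resolves dependencies and skips any step whose output already exists. It is not our pipeline code and not any particular workflow manager: the `Step` class, the `run` function and the `write_marker` helper are hypothetical names chosen for illustration, and a file on disk stands in for whatever output a real step would produce.

```python
from pathlib import Path
from typing import Callable, List, Optional


class Step:
    """One pipeline step: a name, an output file, and the steps it depends on."""

    def __init__(self, name: str, output: Path,
                 action: Callable[[Path], None],
                 requires: Optional[List["Step"]] = None) -> None:
        self.name = name
        self.output = output
        self.action = action
        self.requires = requires or []

    def complete(self) -> bool:
        # A step counts as done when its output already exists on disk.
        return self.output.exists()


def run(step: Step, log: List[str]) -> None:
    """Run `step` after its dependencies, skipping anything already complete."""
    if step.complete():
        log.append(f"skip {step.name}")
        return
    for dep in step.requires:
        run(dep, log)
    step.action(step.output)
    log.append(f"ran {step.name}")


def write_marker(text: str) -> Callable[[Path], None]:
    """Hypothetical stand-in for real work: write `text` to the output file."""
    def action(output: Path) -> None:
        output.write_text(text)
    return action
```

With two steps where `load` requires `extract`, the first call to `run(load, log)` executes both. If the output of `load` is then deleted, only `load` runs again, because the output of `extract` is still there. That is the quick feedback loop we were after.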

We are not alone

It is no surprise that there are many workflow managers out there, all created to orchestrate and automate this kind of process across many tech companies.

We met Luigi and liked it a lot.

Luigi is open source, battle-tested and written in Python.

We can now spin up a cheap disposable machine (preemptible or spot) in the Google or Amazon cloud, and run the whole pipeline with a single command using Luigi. We can monitor, stop, start and resume the run.

Luigi is also compatible with pretty much any technology we can think of, and out-of-the-box it enabled us to store the state of the ETL pipeline directly in Elasticsearch.

The only downside of Luigi for our workflow is that it has no explicit Docker support. Although it would be possible to launch Docker containers in an external process from the shell or in Kubernetes/minikube, none of those scenarios looked optimal for us.

To solve this, we have written a module (a runner, in Luigi terms) and contributed it to the main repo. It can run any Docker container by talking directly to the Docker API.

Our module uses the official Python library for the Docker Engine API to talk to the low-level Docker API, which gives us fine-grained control over the volume mounts. Multiple containerised workloads can be scheduled in parallel and orchestrated using Luigi.
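As a rough illustration of what talking to the low-level Docker API looks like, the sketch below runs one container with explicit bind mounts using `docker.APIClient` from the Docker SDK for Python. It is a simplified sketch, not the code of our Luigi module: `build_binds` and `run_step` are hypothetical helper names, the socket path is the usual Linux default, and reading `StatusCode` from the result of `wait` assumes version 3.0 or later of the SDK.

```python
from typing import Dict, List


def build_binds(mounts: Dict[str, str], read_only: bool = False) -> List[str]:
    """Turn {host_path: container_path} into 'host:container:mode' bind strings."""
    mode = "ro" if read_only else "rw"
    return [f"{host}:{container}:{mode}" for host, container in sorted(mounts.items())]


def run_step(image: str, command: str, binds: List[str]) -> int:
    """Run one containerised step and return its exit code."""
    # Imported lazily so build_binds can be used without the SDK installed.
    import docker

    # Assumption: the Docker daemon listens on the default Unix socket.
    client = docker.APIClient(base_url="unix://var/run/docker.sock")
    host_config = client.create_host_config(binds=binds)
    container = client.create_container(image, command=command, host_config=host_config)
    try:
        client.start(container["Id"])
        # Blocks until the container exits (a dict in SDK 3.0+).
        result = client.wait(container["Id"])
        return result["StatusCode"]
    finally:
        # Always clean up the container, even if the step failed.
        client.remove_container(container["Id"], force=True)
```

A call such as `run_step("alpine", "ls /data", build_binds({"/tmp/otdata": "/data"}))` would list the mounted directory from inside the container. The image name and paths here are placeholders.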

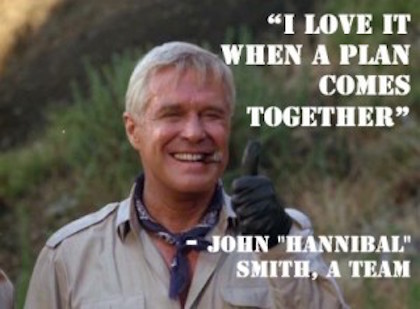

Now we can use Luigi to run our pipeline (which we call Mr.T-arget) on a monthly basis on any architecture that comes with the Docker daemon. You can check out an example of how we use our Luigi orchestrator, called Hannibal (for obvious reasons).

What does this mean for our users?

They can look forward to more data sources: with automatic and rapid feedback on each of our code changes, it will be easier to add new data sources without breaking the existing pipeline. Users will also benefit from more frequent updates of our target-disease associations. We hope this will help our users find better drug targets and remain up to date with the latest evidence.