Provenance Metadata — towards reproducible research

My name is Manuel Bernal Llinares, I'm the back end lead at Open Targets, where I mentor, supervise and work hand in hand with enthusiastic software engineers to bring our flagship target discovery platform to the life sciences community.

In this blog post I introduce to you our next stop in the technical roadmap, which is a step forward on improving our data pipelines and also our FAIRness scoring, a new feature that addresses provenance metadata, reliability and reproducibility in our data collection process.

What is FAIRness?

FAIR stands for Findability, Accessibility, Interoperability, and Reuse. It is good practice to apply these principles to digital assets, now that we are relying more and more on computational support to deal with the increasing amount, complexity, and generation of data.

Find our more at go-fair.org

Data Collection

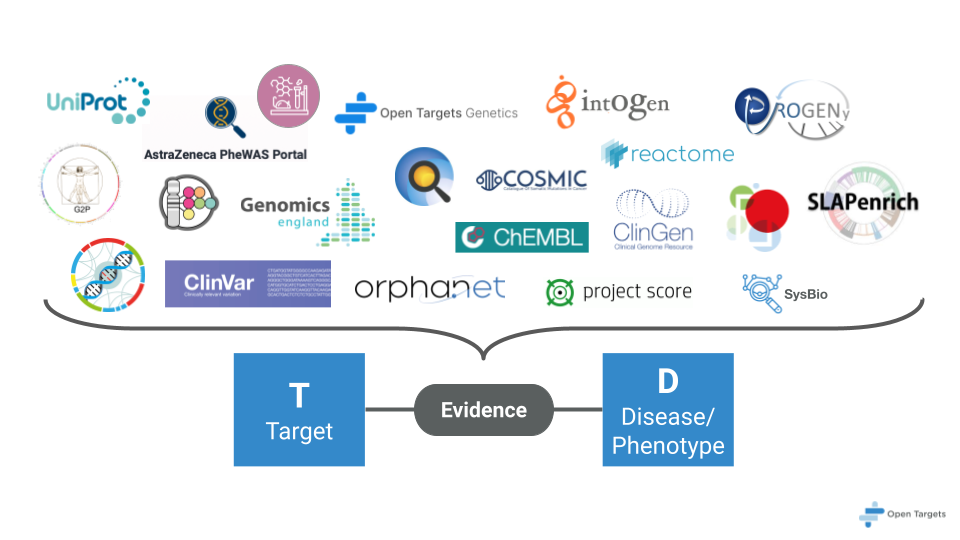

Collecting data from an heterogenous landscape of data providers is a complex and challenging process. In order to do this effectively, it is important to have a reliable, resilient, scalable, and reproducible data collection pipeline.

One of the key challenges of data collection is dealing with the variety of data formats and structures that may be used by different data providers, e.g. file formats, transfer protocols, data repositories (e.g. Elastic Search), etc.

Scalability is another key consideration when collecting data from a heterogeneous landscape of data providers. The data collection pipeline should be designed to handle large volumes of data, and should be able to scale up or down as needed to meet changing demands.

Another challenge of data collection is ensuring that the data is collected in a reliable and resilient way. This means that the data collection pipeline should be designed to handle failures and errors gracefully, so that data can be collected and processed even in the face of unexpected issues or disruptions.

Finally, reproducibility is an important consideration when collecting data for scientific analysis. This means that the data collection pipeline should be well-documented and easily reproducible, so that other researchers can replicate the data collection process and verify the results of the analysis.

Open Targets Platform input data collection process is carried out by our Platform Input Support pipeline.

The different data providers are grouped together in the following logical steps (for more details on our data providers, please refer to our documentation:

- GO: This step collects data from the Gene Ontology (GO) database, which provides a standardized vocabulary to describe genes and their functions.

- Drug: This step collects data on drugs, including information on their targets, indications, and other pharmacological properties.

- Target: This step collects data on the molecular targets of drugs and other compounds, including proteins, nucleic acids, and other biomolecules.

- OpenFDA: This step collects data from the OpenFDA API, which provides access to data from the US Food and Drug Administration (FDA), including adverse events, drug labels, and other information.

- Evidence: This step collects various types of evidence for target-disease associations, including genetic association studies, functional genomics data, and other sources.

- Disease: This step collects data on diseases and their associated genes, including information on disease ontologies, clinical features, and other properties.

- Interactions: This step collects data on protein-protein interactions and other types of molecular interactions, including information on the molecular mechanisms underlying these interactions.

- Literature: This step collects data from the scientific literature, including publications on genetics, genomics, and other areas of research relevant to Open Targets. Data is obtained from Europe PMC, through a data mining and machine learning pipeline.

- Mouse phenotypes: This step collects data on mouse phenotypes, including information on the genetic and environmental factors that influence phenotype expression. Data is obtained from the International Mouse Phenotypes Consortium.

- Homologues: This step collects data on homologous genes, which are genes that share a common ancestry and are often functionally related. Data is obtained from Ensembl.

- SO: This step collects data on sequence ontologies, which provide standardized vocabularies to describe genetic variation and other features of genomic sequences. Data is obtained from Sequence Ontology project.

- Reactome: This step collects data on biological pathways and other types of molecular interactions, including information on the molecular mechanisms underlying these interactions. Data is obtained from the Reactome database.

- Expression: This step collects data on gene expression patterns, including information on tissue-specific expression, developmental expression, and other properties.

All this data, collected from the different Data Providers becomes an integral part of the platform release, contributing to its anatomy in the following way:

<release_name>/

- conf/ -> configuration files

- input/ -> input data collected by PIS

- jars/ -> 'jar' file used for running the data analysis

- output/ -> data analysis products from the ETL

Launched with the February release (23.02), there is an additional product from PIS in the input folder, a Data Collection Manifest, which contains the minimum metadata-set needed for following a file, produced by PIS in the input folder structure, all the way back to the Data Provider Resource that is the origin of that data point.

The Data Collection Manifest is produced in JSON format, at the data release relative location:

input/manifest.json

Its contents serve a multidimensional purpose, and this is reflected in the data model implemented by this new feature.

Manifest Data Model

PIS Session

Running PIS for collecting data is an action that happens within the context of a PIS Session, and this concept is represented in the data model as:

{

"py/object": "manifest.models.ManifestDocument",

"session": "2023-02-06T11:38:40+00:00",

"created": "2023-02-06T11:38:40+00:00",

"modified": "2023-02-06T11:38:40+00:00",

"steps": [],

"status": "NOT_COMPLETED",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "All steps completed their data collection",

"msg_validation": "NOT_SET"

}

session is a unique identifier for the PIS Session that acts as scope for the rest of the metadata in the manifest.

created, this timestamp will state when the PIS Session was first started. PIS allows for full and partial data collection runs, i.e. all steps or only selected ones, this allows us to re-collect data, when needed, from a group of data sources (grouped by the concept of step), as part of the same overall data collection session.

modified, will point to the last time a data collection happened within the scope of a particular session.

Upon completion, there are three attributes that will report on the results of the data collection process itself:

- status_completion, possible values:

- COMPLETED, all the steps that are part of the PIS Session being described were able to collect their data successfully.

- FAILED, at least one step in the session was not able to complete its data collection process.

- status_validation, possible values:

- VALID, the collected data in the session passed all the validation tests.

- INVALID, at least one validation test was not passed by the session's collected data.

- NOT_VALIDATED, no validation tests have been run on the session's collected data.

- msg_completion and msg_validation contain additional information to their corresponding status_ homologue.

- status attribute is not being used yet, and its value should not be taken into consideration.

steps, is a collection of metadata on the different steps that are part of the data collection session.

PIS Step

A PIS Session is a collection of different steps, conceptually and logically grouping together different data resources.

Every step that part of a PIS Session is described via the following data model:

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:44:45+00:00",

"modified": "2023-02-06T13:44:45+00:00",

"name": "Disease",

"resources": [],

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

When needed, we may run a data collection step multiple times within the same session and, although we are not interested in detailed historical information, we are interested in whether a step was run multiple times. For this purpose we have:

- created, when the step run for the first time.

- modified, the last time this step was (re-)run.

name is just the step's name, that happens to be unique across the session.

And the status attributes with their msg companions, providing information on completeness and validation of the data that a particular step is meant to collect from our data providers.

All the data collected by PIS is produced as files, used further down our processing pipelines, and organized in a hierarchical way under the input location in the release data.

Every file is a Collected Resource, responsibility of a particular step in PIS, the attribute resources contains metadata for all the Collected Resources in a particular step.

Collected Resource

Every data point produced by a PIS step is a modeled as a Collected Resource, and stored as a file within the input data folder hierarchy, independently of its origin (file, Elastic Search query, database request...)

The following data model is used in the manifest file to describe a Collected Resource:

{

"py/object": "manifest.models.ManifestResource",

"created": "2023-02-06T13:44:45+00:00",

"source_url": "https://github.com/EBISPOT/efo/releases/download/v3.50.0/efo_otar_slim.owl",

"path_destination": "ontology-inputs/ontology-efo.jsonl",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "OWL to JSON + JQ filtering with '.[\"@graph\"][] | select (.[\"@type\"] == (\"Class\",\"Restriction\") and .\"@id\" != null)|.subClassOf=([.subClassOf]|flatten|del(.[]|nulls))|.hasExactSynonym=([.hasExactSynonym]|flatten|del(.[]|nulls))|.hasDbXref=([.hasDbXref]|flatten|del(.[]|nulls))|.inSubset=([.inSubset]|flatten|del(.[]|nulls))|.hasAlternativeId=([.hasAlternativeId]|flatten|del(.[]|nulls))|@json' and some processing via EFO helper to produce this resulting diseases dataset",

"msg_validation": "NOT_SET",

"source_checksums": {

"py/object": "manifest.models.ManifestResourceChecksums",

"md5sum": "NOT_SET",

"sha256sum": "NOT_SET"

},

"destination_checksums": {

"py/object": "manifest.models.ManifestResourceChecksums",

"md5sum": "c54245232d004152c017902d5ba55c93",

"sha256sum": "15194bf3eb5fa9e3783a5cce0d31cd832dce0fecd467be423cc180970f60c65b"

}

}

created states when the resource was collected.

The data file, which path relative to the input folder is provided by path_destination, contains the information collected from the given source_url.

This model contains the usual status attributes with their corresponding msg companions, where, in the example shown above, provides additional information as free text, indicating that the original file was converted from OWL to JSON format, and then its original data manipulated by the given JQ filter.

Checksum information is offered about the produced resource via:

- destination_checksums, provides MD5 and SHA256 hashes of the file produced at path_destination, which may or may not be a copy of the data source at source_url depending on whether or not there were data transformations.

- source_checksums, this information may not always be available, as not all the providers offer checksum information on their data, and it mainly applies to those data sources that are files.

Using the Manifest Data Model

As an example of useful answers to important questions about a data collection process, we provided some JQ based manipulation / filtering statements.

(These examples used our 23.02 release manifest file)

Get a completion summary on the given session manifest

jq 'del(.steps)' manifest.json

Result

{

"py/object": "manifest.models.ManifestDocument",

"session": "2023-02-06T11:38:40+00:00",

"created": "2023-02-06T11:38:40+00:00",

"modified": "2023-02-06T11:38:40+00:00",

"status": "NOT_COMPLETED",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "All steps completed their data collection",

"msg_validation": "NOT_SET"

}

Get the names of the steps in the session

jq '.steps[] | .name' manifest.json

Result

"GO"

"Drug"

"Target"

"OpenFDA"

"Evidence"

"Disease"

"Interactions"

"Literature"

"Mousephenotypes"

"Homologues"

"SO"

"Reactome"

"Expression

Get the steps manifest objects for the given session

If we'd like to have a look at the manifest metadata at step level, we can use this query to filter out resources downloaded by every step

jq '.steps[] | del(.resources)' manifest.json

Result

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T11:38:40+00:00",

"modified": "2023-02-06T11:38:40+00:00",

"name": "GO",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T11:38:41+00:00",

"modified": "2023-02-06T11:38:41+00:00",

"name": "Drug",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T12:17:12+00:00",

"modified": "2023-02-06T12:17:12+00:00",

"name": "Target",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T12:36:05+00:00",

"modified": "2023-02-06T12:36:05+00:00",

"name": "OpenFDA",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its data collection",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:44:31+00:00",

"modified": "2023-02-06T13:44:31+00:00",

"name": "Evidence",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:44:45+00:00",

"modified": "2023-02-06T13:44:45+00:00",

"name": "Disease",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:48:51+00:00",

"modified": "2023-02-06T13:48:51+00:00",

"name": "Interactions",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:52:14+00:00",

"modified": "2023-02-06T13:52:14+00:00",

"name": "Literature",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:53:03+00:00",

"modified": "2023-02-06T13:53:03+00:00",

"name": "Mousephenotypes",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T13:53:04+00:00",

"modified": "2023-02-06T13:53:04+00:00",

"name": "Homologues",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T15:42:45+00:00",

"modified": "2023-02-06T15:42:45+00:00",

"name": "SO",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T15:42:50+00:00",

"modified": "2023-02-06T15:42:50+00:00",

"name": "Reactome",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

{

"py/object": "manifest.models.ManifestStep",

"created": "2023-02-06T15:42:51+00:00",

"modified": "2023-02-06T15:42:51+00:00",

"name": "Expression",

"status_completion": "COMPLETED",

"status_validation": "NOT_VALIDATED",

"msg_completion": "The step has completed its execution",

"msg_validation": "NOT_SET"

}

Get filenames and checksums for the files downloaded by a particular step

To get the file relative path and its sha256 destination checksum (after downloading the file) for a particular step, e.g. GO, we could use a query like this one

jq '.steps[] | select(.name == "GO") | .resources[] | {path: .path_destination, checksum: .destination_checksums.sha256sum}' manifest.json

Result

{

"path": "gene-ontology-inputs/go.obo",

"checksum": "d02518226c7d29ae42fcdcc1180efd1471021e22fee1b63ea6d00094f366f65f"

}

Show the files produced by a particular step

For sample step target, we could list the produced files with the following query (e.g. for Target)

jq '.steps[] | select(.name == "Target") | .resources[] | .path_destination' manifest.json

Result

"target-inputs/gencode/gencode.gff3.gz"

"target-inputs/homologue/cpd.tsv.gz"

"target-inputs/homologue/crna.tsv.gz"

"target-inputs/go/ensembl.tsv"

"target-inputs/go/goa_human.gaf.gz"

"target-inputs/go/goa_human_rna.gaf.gz"

"target-inputs/go/goa_human_eco.gpa.gz"

"target-inputs/homologue/species_EnsemblVertebrates.txt"

"target-inputs/ncbi/Homo_sapiens.gene_info.gz"

"target-inputs/genenames/hgnc_complete_set.json"

"target-inputs/reactome/Ensembl2Reactome.txt"

"target-inputs/genenames/human_all_hcop_sixteen_column.txt.gz"

"target-inputs/project-scores/gene_identifiers_latest.csv.gz"

"target-inputs/uniprot/uniprot.txt.gz"

"target-inputs/uniprot/uniprot-ssl.tsv.gz"

"target-inputs/hpa/subcellular_locations_ssl.tsv"

"target-inputs/hallmarks/cosmic-hallmarks.tsv.gz"

"target-inputs/tep/tep.json.gz"

"target-inputs/chemicalprobes/chemicalprobes.json.gz"

"target-inputs/safety/safetyLiabilities.json.gz"

"target-inputs/tractability/tractability.tsv"

"target-inputs/project-scores/04_binaryDepScores.tsv"

"target-inputs/ensembl/homo_sapiens.jsonl"

"target-inputs/hpa/subcellular_location.tsv.gz"

"target-inputs/gnomad/gnomad_lof_by_gene.txt.gz"

List all the files produced by PIS in the given session

jq '.steps[] | .resources[] | .path_destination' manifest.json

(results from this query are omitted)

And count them

jq '.steps[] | .resources[] | .path_destination' manifest.json | wc -l

Result

1424

Get the SHA256 checksums for all the files produced by PIS in the given session

jq '.steps[] | .resources[] | {path: .path_destination, checksum: .destination_checksums.sha256sum}' manifest.json

Result (partial as the complete listing is too bigger than useful for the purposes of this article)

{

"path": "so-inputs/so.json",

"checksum": "16157b962e35704ff73bf35280ea6c3bbe1d4a68537364a8bfee6d6dc166b0ab"

}

{

"path": "reactome-inputs/ReactomePathways.txt",

"checksum": "793c456e6742fa27d37a61910ac54baea813586f908818c4f84bd44e56b4ca24"

}

{

"path": "reactome-inputs/ReactomePathwaysRelation.txt",

"checksum": "8a80c00688e05a243f350647c2dcb8fa9b0523716c26180748cf48fc18f798a7"

}

{

"path": "expression-inputs/expression_hierarchy_curation.tsv",

"checksum": "b124338ed798cd28a70393c1e986aa5440757ef7d8c073c76b0f6c9c58c7d53a"

}

{

"path": "expression-inputs/baseline_expression_binned.tsv",

"checksum": "e53f8cb458b6529760ffea5f489b8441873731d8e56860bf862f33c6e56f5eba"

}

{

"path": "expression-inputs/baseline_expression_counts.tsv",

"checksum": "385a9f580fa3ef52ccb40e22efffe765012a4a26510a452a9e4f7d775a6e75e3"

}

Get provenance information for every PIS product

We can use the following query

jq '.steps[] | .resources[] | {source: .source_url, path: .path_destination, checksum: .destination_checksums.sha256sum}' manifest.json

Result (partial as the complete listing is too bigger than useful for the purposes of this article)

{

"source": "https://reactome.org/download/current/ReactomePathwaysRelation.txt",

"path": "reactome-inputs/ReactomePathwaysRelation.txt",

"checksum": "8a80c00688e05a243f350647c2dcb8fa9b0523716c26180748cf48fc18f798a7"

}

{

"source": "https://raw.githubusercontent.com/opentargets/expression_hierarchy/master/process/curation.tsv",

"path": "expression-inputs/expression_hierarchy_curation.tsv",

"checksum": "b124338ed798cd28a70393c1e986aa5440757ef7d8c073c76b0f6c9c58c7d53a"

}

{

"source": "gs://atlas_baseline_expression/baseline_expression_binned/expatlas.2020-05-07.baseline.binned.tsv",

"path": "expression-inputs/baseline_expression_binned.tsv",

"checksum": "e53f8cb458b6529760ffea5f489b8441873731d8e56860bf862f33c6e56f5eba"

}

{

"source": "gs://atlas_baseline_expression/baseline_expression_counts/exp_summary_NormCounts_genes_all_tissues_cell_types-2020-05-07.txt",

"path": "expression-inputs/baseline_expression_counts.tsv",

"checksum": "385a9f580fa3ef52ccb40e22efffe765012a4a26510a452a9e4f7d775a6e75e3"

}

{

"source": "gs://atlas_baseline_expression/baseline_expression_zscore_binned/expatlas.2020-05-07.baseline.z-score.binned.tsv",

"path": "expression-inputs/baseline_expression_zscore_binned.tsv",

"checksum": "793b5755a5d9ee5bf719eb3d391dd0b33064b473cdfc4dafd57a5a7c74d46af3"

}

{

"source": "https://raw.githubusercontent.com/opentargets/expression_hierarchy/master/process/map_with_efos.json",

"path": "expression-inputs/tissue-translation-map.json",

"checksum": "70126079b1c71293b4f21812066133da0defbc7f577fd58ddd6fcee0be3ad9af"

}

Final Remarks

The new Manifest Metadata produced by PIS provides a clear and concise way to keep track of the data collection process and its metadata. This model offers a hierarchical structure, where each level of the data model describes a different aspect of the data collection process.

The PIS Session level provides information about the overall process of data collection, PIS Step level offers information about each individual step of the data collection process, and the Collected Resource level provides detailed information about each data point collected by PIS, it includes the source of the data, the destination file (within the input folder file structure), and any additional processing or transformations that may have been performed, as well as checksum information for both source and destination.

Overall, this new Data Collection Manifest feature offers a powerful tool for tracking and understanding the data collection process, making it easier to identify potential issues and ensure the accuracy and completeness of the data collected, leveling up Open Targets FAIRness.